Creating a Wordcloud

Introduction

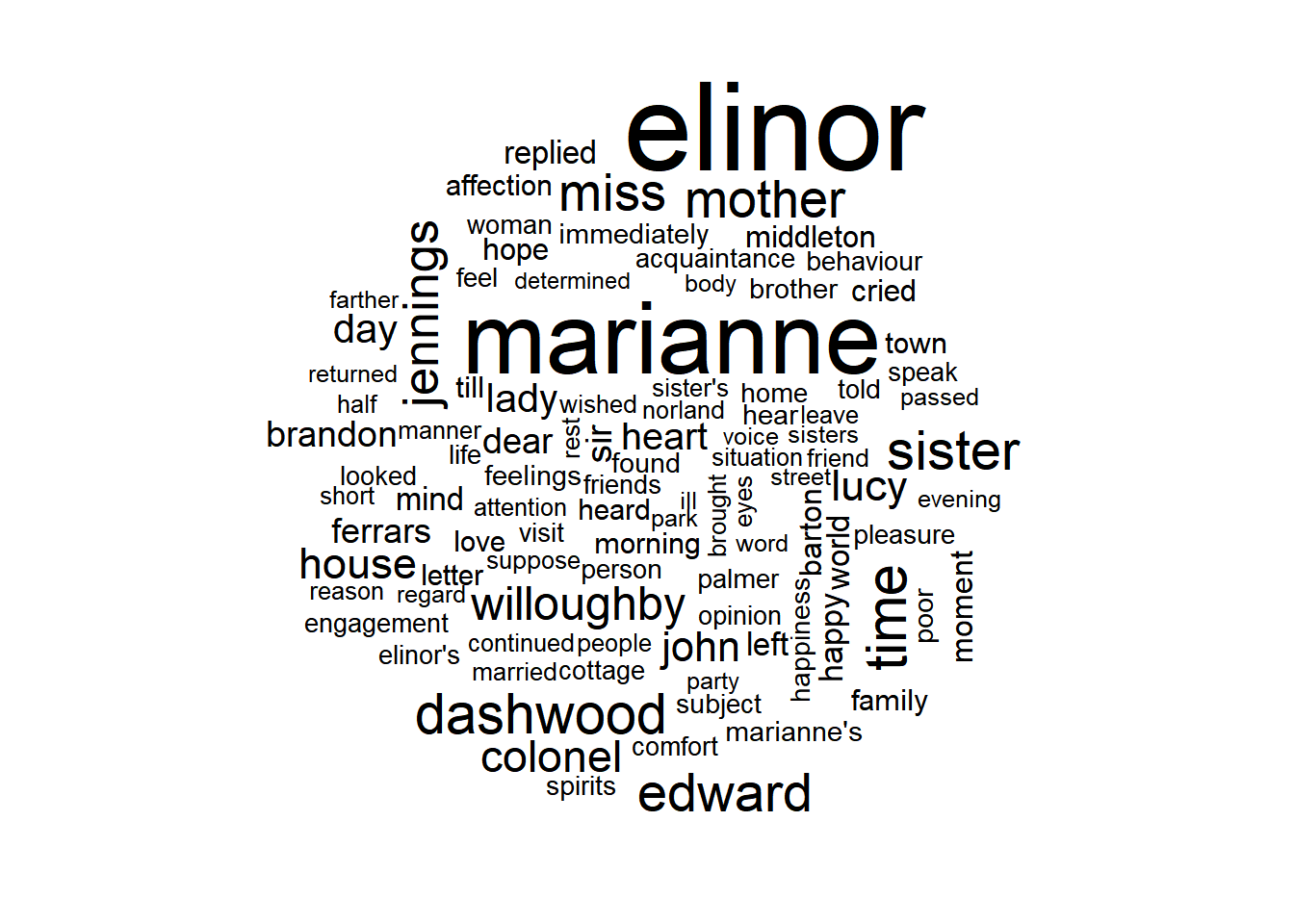

A wordcloud is a common visualization to show how often a word is repeated in a set of text. The words appear on the image and the larger the word, the more common it is in the text. In this example, we will be evaluating Jane Austen’s novel “Sense & Sensibility”, which is available via the R package janeaustenr.

Gathering and Cleaning the Data

First, we will import our libraries and using the austen_books() funtion from janeaustenr, filter out the book “Sense & Sensibility”.

library(dplyr)

library(stringr)

library(tidytext)

library(janeaustenr)

#gather all the books available from the janeaustenr package

sns<-austen_books()

#filter out the book we want to analyze

sns<-sns%>%

filter(book == 'Sense & Sensibility')

print(sns, n=20)## # A tibble: 12,624 x 2

## text

## <chr>

## 1 SENSE AND SENSIBILITY

## 2

## 3 by Jane Austen

## 4

## 5 (1811)

## 6

## 7

## 8

## 9

## 10 CHAPTER 1

## 11

## 12

## 13 The family of Dashwood had long been settled in Sussex. Their estate

## 14 was large, and their residence was at Norland Park, in the centre of

## 15 their property, where, for many generations, they had lived in so

## 16 respectable a manner as to engage the general good opinion of their

## 17 surrounding acquaintance. The late owner of this estate was a single

## 18 man, who lived to a very advanced age, and who for many years of his

## 19 life, had a constant companion and housekeeper in his sister. But her

## 20 death, which happened ten years before his own, produced a great

## # ... with 1.26e+04 more rows, and 1 more variables: book <fctr>Looking at the data, we can see that there are some words we don’t want to include, such as the title for each chapter. We know that the word “Chapter” occurs for each chapter, so let’s eliminate that by using stringr’s function str_detect() and a basic regular expression.

sns<-sns%>%

filter(!str_detect(sns$text, "^CHAPTER"))Wordclouds need to count the words, not lines of text, so next we have to split apart each line of text into words. The tidytext package provides a function called unnest_tokens() which does exactly that. In short, it splits a line of text using a blank space as the seperator. This can result in some of the words to be numbers, which you may or may not want to exclude later on.

# word is the column the word will go in

# text is the column from sns that will be parsed

words<-sns%>%

unnest_tokens(word, text)

print(words, n=20)## # A tibble: 119,857 x 2

## book word

## <fctr> <chr>

## 1 Sense & Sensibility sense

## 2 Sense & Sensibility and

## 3 Sense & Sensibility sensibility

## 4 Sense & Sensibility by

## 5 Sense & Sensibility jane

## 6 Sense & Sensibility austen

## 7 Sense & Sensibility 1811

## 8 Sense & Sensibility the

## 9 Sense & Sensibility family

## 10 Sense & Sensibility of

## 11 Sense & Sensibility dashwood

## 12 Sense & Sensibility had

## 13 Sense & Sensibility long

## 14 Sense & Sensibility been

## 15 Sense & Sensibility settled

## 16 Sense & Sensibility in

## 17 Sense & Sensibility sussex

## 18 Sense & Sensibility their

## 19 Sense & Sensibility estate

## 20 Sense & Sensibility was

## # ... with 1.198e+05 more rowsAfter that, we want to exclude any other commonly used words. These are called stop words, and include words like “a”, “and”, “the”. There are several others included in a dataframe provided by tidytext called stop_words. Using dplyr, we can filter these out quite easily.

words<-words%>%

filter(!(word %in% stop_words$word))Grouping and Summarizing the Data

Now that we have our data split up into words, we need to count how many times a word is used. Using dplyr, we can create a new dataframe to group by the word, and then count it.

wordFreq<-words%>%

group_by(word)%>%

summarize(count=n())Creating the Wordcloud

Creating a wordcloud is quite simple once the library has been imported. All we need to do is pass in the word, and the number of times it occurs. We can then configure how many words will be shown, or how many times the word has to appear in order to include it. In this example, there are so many used words, that the wordcloud takes a long time to generate, so we are limiting it to the top 100 words used.

library(wordcloud)

wordcloud(wordFreq$word, wordFreq$count, max.words=100)

The Code

The full code for this article can be found on my GitHub Gist Page.